Research Achievement Report 2009

Wednesday, February 10, 2010

Reported by Nhung Nguyen, M1, Student ID 80925784

Kiyoki Laboratory, Graduate School of Media and Governance, SFC, Keio University

Email: nhungntt@sfc.keio.ac.jp or sofpast@gmail.com

A semantic metadata extraction for cross-cultural music environments by using culture-based music samples and filtering functions

Abstract

In the field of music retrieval, one important issue is how to extract semantic metadata for culture-based ethnic music, rather than focusing only on Western classical music. In this research, we propose a method to extract semantic metadata for several kinds of music. The significant features of our method are (1) normalizing tuning parameters based on the music data set when extracting music structural factors, and (2) creating a cultural music filter to generate the music element - emotion impression transformation using music samples. With this method, weighted impression words are extracted according to each culture. The experimental results demonstrate the feasibility of our method and indicate the challenges of this issue.

Motivation

Today, with the boom in digital music, people increasingly take advantage of online music platforms to enjoy music from countries across the world (websites such as YouTube and Myspace). As a result, researchers are trying to build music search engines based on users' emotions. However, most of these studies focus narrowly on the pre-20th-century Western tonal system (Western classical music) and do not yet address other kinds of music. Furthermore, more and more material about cross-cultural music is becoming available on the Internet. This makes the present a good time to study cross-cultural aspects of music.

Key Problem and Research Goals

Key problems

There are at least three open problems in this field. The first is "Do people of different cultures compose music in a similar way or not?" and the second is "Does music affect people around the world in similar ways?" [6]. In addition, from the viewpoint of music retrieval by mood, there is a gap between the richness of meaning that users want to use and the shallowness of the content descriptors we can actually compute [5].

Project Goals

In this research, we aim to build a semantic metadata extractor for culture-based ethnic music that computes the emotions/impressions implied in the music of each culture in its own way. To deal with the questions above, this system for cross-cultural music environments is constructed from music samples and music filtering, inspired by the Computational Media Aesthetics (CMA) approach [5]. This approach considers how music is made and structured, and interprets the media data through its maker's eyes. Finally, the semantic metadata are extracted in the emotion layer (sad, happy, dreamy and so on).

Basic Method

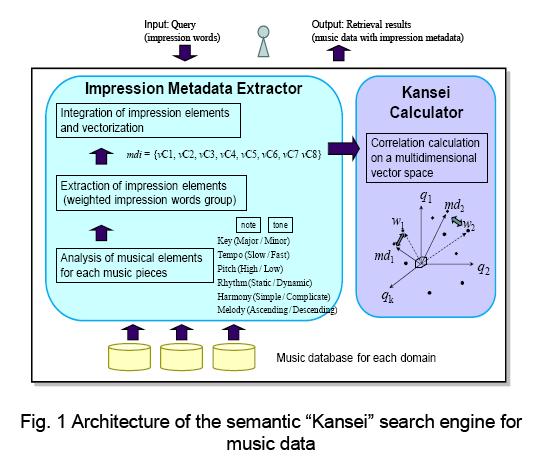

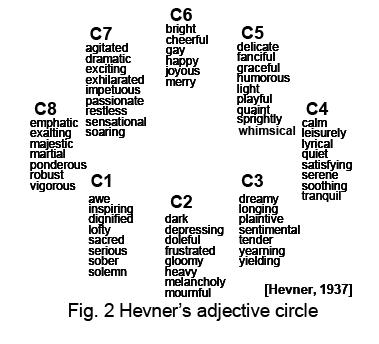

A. Semantic "Kansei" search engine for multimedia data of Western classical music

In current research on music information retrieval (MIR), several authors have studied the strong correlations between emotional impressions and various musical elements, using many emotion detection methods. In this field, a fundamental framework for MIDI music data retrieval has been proposed using a media-lexico transformation operator [4]. The metadata extracted by that system can be divided into two layers: a musical element layer and an emotion impression layer (Fig. 1). To extract emotional impressions from music data, the framework is built on Kate Hevner's adjective checklist, in which emotions are represented by 65 adjectives grouped into 8 main categories (dignified, sad, dreamy, serene, graceful, happy, exciting and vigorous) (Fig. 2). In addition, based on the results of Hevner's experiments, 6 basic musical elements of music structure are extracted: key, tempo, pitch, rhythm, harmony and melody [1][2]. A media-lexico transformation operator is introduced to calculate the relationship between musical elements and impression words. Under this operator, a major key, fast tempo and high pitch are strongly related to happy and graceful feelings, while sad or dreamy feelings are usually expressed in the minor mode with a slow tempo and lower pitches. Rhythm, harmony and melody are also mapped by the operator, each associated with certain emotions in Hevner's adjective checklist. In the experiments of the previous research [4], the operator automatically detected emotions in Western music, represented as a set of impression words with intuitively acceptable weights for MIDI music files.
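To make the operator concrete, the following sketch treats it as a matrix product: a piece is encoded as a vector over the six musical elements, and a transformation matrix T with one row per element and one column per Hevner category turns that vector into eight category weights. The bipolar encoding (+1 for major/fast/high, -1 for minor/slow/low), the names `transform`, `top_impressions` and `TOY_MATRIX`, and all matrix values are hypothetical illustrations, not the values used in [4].

```python
# Hypothetical sketch of a media-lexico style transformation as a matrix product.
CATEGORIES = ["dignified", "sad", "dreamy", "serene",
              "graceful", "happy", "exciting", "vigorous"]
ELEMENTS = ["key", "tempo", "pitch", "rhythm", "harmony", "melody"]

# Hypothetical toy matrix T: one row per element, one weight per category.
# A positive weight means the +1 pole (major / fast / high) supports the category,
# so the -1 pole (minor / slow / low) supports categories with negative weights.
TOY_MATRIX = {
    "key":     [0, -1, -1, 0, 1, 1, 0, 0],
    "tempo":   [0, -1, -1, 0, 1, 1, 1, 0],
    "pitch":   [0, -1, -1, 0, 1, 1, 0, 0],
    "rhythm":  [0] * 8,  # placeholder rows: left empty in this toy example
    "harmony": [0] * 8,
    "melody":  [0] * 8,
}


def transform(features, matrix):
    """Return {category: weight}, i.e. the row vector `features` times `matrix`.

    features: {element: encoded value}; missing elements count as 0.
    """
    weights = [0.0] * len(CATEGORIES)
    for element in ELEMENTS:
        value = features.get(element, 0.0)
        for j, w in enumerate(matrix[element]):
            weights[j] += value * w
    return dict(zip(CATEGORIES, weights))


def top_impressions(weights, n):
    """Return the n impression categories with the highest weights."""
    return sorted(weights, key=lambda c: -weights[c])[:n]


# A minor-key, slow, low-pitched piece.
w = transform({"key": -1, "tempo": -1, "pitch": -1}, TOY_MATRIX)
print(top_impressions(w, 2))  # ['sad', 'dreamy']
```

Representing T as a plain table keeps the operator inspectable: each cell states how strongly one musical element pulls toward one impression word.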

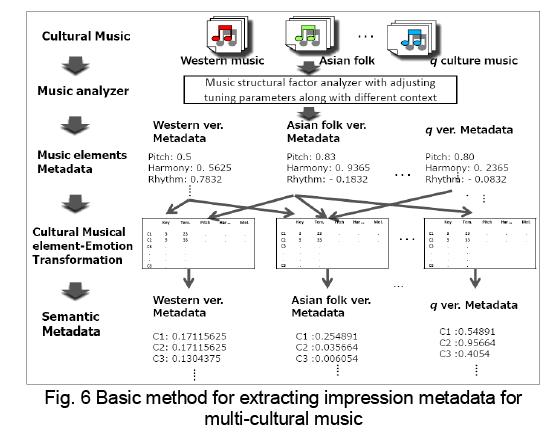

B. Impression metadata extraction for multi-cultural music

To deal with several music cultures, two requirements for extracting impression metadata arise: (1) how to extract music structural factors when the cultural context of the music changes, and (2) how to generate several transformation matrices T that transform music elements into weighted impression words for different cultures. Fig. 6 depicts our basic idea for meeting these requirements.

- By adjusting tuning parameters, music structural factors are extracted according to the characteristics of each music culture. These parameters differ from one culture to another.

- Inspired by the CMA approach, several music element - emotion impression (E-I) transformations are generated using music samples and a music filter function, in response to the change of context from one culture to another. Each transformation reflects the relationship between musical elements and human feelings in a certain culture; hence the transformations also differ among cultures across the music world.
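The first point, culture-dependent tuning parameters, can be sketched under one simple assumption: each threshold is the median of the culture's own data set, and a raw measurement is encoded as +1 (above the culture's typical value) or -1 (below it). The median rule and the names `fit_tuning_parameters` and `encode` are hypothetical; the report does not specify how the parameters are normalized.

```python
from statistics import median


def fit_tuning_parameters(dataset):
    """Derive one threshold per raw feature from a single culture's data set.

    dataset: list of {feature: raw value}, e.g. {"tempo": 72} (tempo in BPM).
    Hypothetical rule: the threshold is the median value within that culture.
    """
    features = dataset[0].keys()
    return {f: median(piece[f] for piece in dataset) for f in features}


def encode(piece, thresholds):
    """Encode raw values as +1 (above threshold), -1 (below) or 0 (equal)."""
    encoded = {}
    for f, t in thresholds.items():
        v = piece[f]
        encoded[f] = 1 if v > t else (-1 if v < t else 0)
    return encoded


# Toy data, not from the experiments: the same 100 BPM piece reads as
# "fast" against a slow repertoire and as "slow" against a fast one.
slow_culture = [{"tempo": 60}, {"tempo": 72}, {"tempo": 80}]
fast_culture = [{"tempo": 110}, {"tempo": 126}, {"tempo": 140}]
piece = {"tempo": 100}
print(encode(piece, fit_tuning_parameters(slow_culture)))  # {'tempo': 1}
print(encode(piece, fit_tuning_parameters(fast_culture)))  # {'tempo': -1}
```

A relative threshold like this avoids importing a Western notion of "fast" or "high" into a repertoire whose typical range is different.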

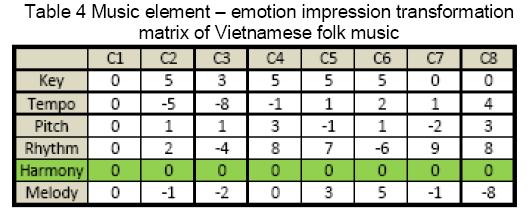

Generating the music element - emotion impression transformation for each culture

Our basic idea for creating the transformation matrices is to use music samples and to build a music filter based on those samples and the CMA viewpoint.

- Step 1: Typical music samples are selected from a large collection of a culture's music. Then the musical element values of these samples are extracted and used as the first version of the transformation matrix.

- Step 2: A music filter is created to emphasize the musical elements that are more important than others in a culture-based music. For instance, in Asian folk music, which lacks semitones, the harmony element is not important in expressing the emotion implied in the music. Therefore, the music filter can omit harmony when calculating the relationship between musical elements and emotional impressions.

- Step 3: The transformation matrix is modified manually to improve its precision. The rule for modifying the table is based on the CMA viewpoint above: from a large enough collection of music, the rules by which a culture's music is made can be identified. Relying on that perspective, we modify the values of the table to follow the way composers make music.
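The three steps can be sketched in code under stated assumptions: Step 1 is modeled as averaging the encoded element values of the samples labeled with each category, Step 2 as zeroing the rows of elements that a culture's filter omits (for example, harmony for pentatonic music), and Step 3 as explicit cell overrides standing in for the manual, CMA-guided corrections. The function names `initial_matrix`, `apply_filter` and `apply_manual_rules` and the averaging rule are hypothetical; the report does not give the exact formulas.

```python
CATEGORIES = ["dignified", "sad", "dreamy", "serene",
              "graceful", "happy", "exciting", "vigorous"]
ELEMENTS = ["key", "tempo", "pitch", "rhythm", "harmony", "melody"]


def initial_matrix(samples):
    """Step 1 (assumed rule): column j = mean element values of the samples in category j.

    samples: list of (features, category) pairs; features maps element -> encoded value.
    """
    matrix = {e: [0.0] * len(CATEGORIES) for e in ELEMENTS}
    for j, category in enumerate(CATEGORIES):
        members = [f for f, c in samples if c == category]
        if not members:
            continue  # no sample for this category: its column stays zero
        for e in ELEMENTS:
            matrix[e][j] = sum(f.get(e, 0) for f in members) / len(members)
    return matrix


def apply_filter(matrix, omitted_elements):
    """Step 2: the culture's filter zeroes the rows of elements it omits."""
    return {e: ([0.0] * len(row) if e in omitted_elements else list(row))
            for e, row in matrix.items()}


def apply_manual_rules(matrix, overrides):
    """Step 3 (stand-in for manual editing): overwrite cells (element, category) -> value."""
    adjusted = {e: list(row) for e, row in matrix.items()}
    for (e, category), value in overrides.items():
        adjusted[e][CATEGORIES.index(category)] = value
    return adjusted


# Toy samples (not the report's data): two sad pieces and one happy piece.
samples = [
    ({"key": -1, "tempo": -1, "harmony": 1}, "sad"),
    ({"key": 1, "tempo": -1, "harmony": 1}, "sad"),
    ({"key": 1, "tempo": 1, "harmony": -1}, "happy"),
]
T = initial_matrix(samples)
T = apply_filter(T, {"harmony"})                  # e.g. pentatonic repertoire: ignore harmony
T = apply_manual_rules(T, {("key", "sad"): 0.5})  # e.g. sad songs often in a major key
print(T["key"])  # [0.0, 0.5, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```

Keeping the steps as separate functions means the same sample-based matrix can be re-filtered or re-adjusted without recomputing Step 1.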

Experiments

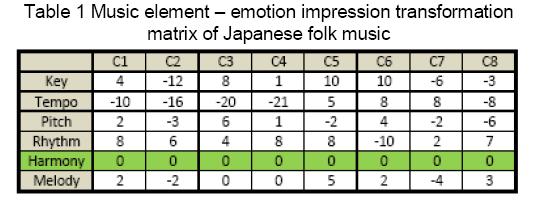

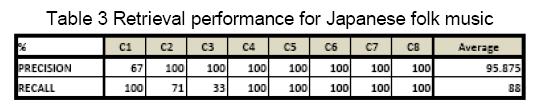

We conducted experiments with Japanese, Vietnamese and Russian folk music. The results for Japanese folk music are listed below.

Experiment 1: Japanese folk music

Twenty-eight Japanese folk songs were collected and the above method was applied to them. The transformation matrix created for Japanese folk music is as follows.

We also evaluated music retrieval with this system, measuring two parameters, recall and precision, as follows.

For instance, a user searches for songs with the keyword "sad", and the system returns a list containing both songs that are actually sad and songs that are not.

- Recall: of all the songs that are actually sad, the proportion that the system detected as sad and put in the list. Sad songs that the system judged "not sad" or failed to detect lower the recall.

- Precision: of all the songs in the returned list, the proportion that are actually sad.
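The two measures reduce to simple set arithmetic. Given the returned list and the set of songs that are truly sad, precision is the share of listed songs that are sad and recall is the share of sad songs that were listed. This is a minimal sketch; the song IDs are made up and the ground-truth labels are assumed to come from human judgments, which the report does not detail.

```python
def precision_recall(retrieved, relevant):
    """Precision and recall for one query such as "sad".

    retrieved: songs the system put in the result list.
    relevant: songs that are actually sad (ground truth).
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # sad songs that the system also listed
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical song IDs: four songs listed, three songs actually sad.
listed = ["song1", "song2", "song3", "song4"]
actually_sad = ["song1", "song2", "song5"]
p, r = precision_recall(listed, actually_sad)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.50 recall=0.67
```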

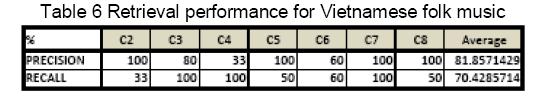

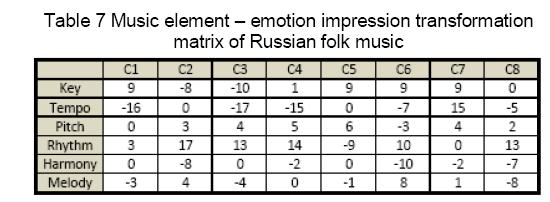

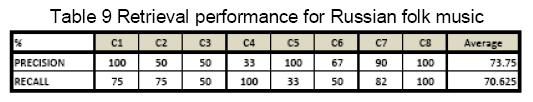

Experiments 2 and 3: Vietnamese and Russian folk music

The same procedure was applied to Vietnamese and Russian folk music. The results are listed as follows.

Comparison of the above culture-based music

- Both Vietnamese and Japanese folk music use the pentatonic system, so the harmony element is not important in this music.

- Most Vietnamese folk songs are sad (C2), dreamy (C3), happy (C5) or C6; only a few belong to C7 or C8. Furthermore, no Vietnamese folk songs fall into C1.

- Most Russian folk songs are in the exciting category (C7). All 6 musical structure elements affect the expression of emotion in Russian music.

- The ways emotion is expressed differ among these countries. For instance, a sad song in Vietnam can be played in a major key, whereas in Russia or Japan a sad song is usually played in a minor mode.

Discussion

After conducting the experiments, we identified several points. (1) First, the size of the music data set was very important for discovering the rules of how music is made: the transformation matrix could become more accurate as the number of collected songs increased. (2) Second, the method relied partly on manual work, so in some cases it was not flexible. (3) Third, not all 6 music elements of Western classical music affected the emotional impression of music from other cultures. From these points, we learned the following lessons for our work.

- Extending the data set is very important to improve the results and to find the rules of how music is made.

- An automatic system is required to make metadata extraction more flexible.

- Extending the set of music features becomes necessary. The 6 music structural features above correspond to Western classical music; in other kinds of music, other factors may also affect the expression of emotion.

Future work

This work was done with the support of manual methods. Future work is to make this system fully automatic and to carry out the improvements listed in the Discussion.

Publication

This year, we also submitted this method to the WASET conference in Tokyo and are awaiting the acceptance notification, expected by February 28.

- Nhung Nguyen, Shiori Sasaki, Asako Uraki, Yasushi Kiyoki. "A semantic metadata extraction for cross-cultural music environments by using culture-based music samples and filtering functions". February 2010.

References

- [1] K. Hevner, "Expression in music: a discussion of experimental studies and theories," Psychological Review, Vol. 42, pp. 186-204, 1935.

- [2] K. Hevner, "Experimental studies of the elements of expression in music," American Journal of Psychology, Vol. 48, pp. 246-268, 1936.

- [3] K. Hevner, "The affective value of pitch and tempo in music," American Journal of Psychology, Vol. 49, pp. 621-630, 1937.

- [4] T. Kitagawa, Y. Kiyoki, "Fundamental Framework for Media Data Retrieval Systems using Media-lexico Transformation Operator in the case of musical MIDI data," Information Modeling and Knowledge Bases XII, IOS Press, 2001.

- [5] C. Dorai, S. Venkatesh, "Computational Media Aesthetics: Finding Meaning Beautiful," IEEE MultiMedia, pp. 10-12, 2001.

- [6] W. H. Jackson, "Cross-Cultural Perception & Structure of Music," 1998.

Nhung Nguyen

Kiyoki Laboratory, SFC