Culture-Dependent Features Computation Model for Emotion-based Image Retrieval

Student Name: Totok Suhardijanto (Doctor Student 2nd Year)

Supervisor: Prof. Kiyoki Yasushi

Student ID: 80849341

Abstract

In this research, we developed a model for computing cultural content in an image retrieval system. The key point of the model is a mechanism that provides more accurate information about the relationship between color and culture-related emotion in two or more cultures (C1, C2 … Cn). In information retrieval research, it is still difficult to incorporate culture-related features into an image search system. Current image retrieval systems are built on low-level features such as texture, shape, color and position. High-level features such as face recognition, emotions and cultural differences remain difficult to use in more open-ended image retrieval tasks.

In this research, we designed an image retrieval system for a specific domain that deals with color- and emotion-related features. We implemented a method that allows an information retrieval system to handle images based on culture-dependent color-emotion associations. A vector space model was implemented in this system to create a culture-oriented computation that can handle culture-related information across cultures. To realize this system, image color features and the color-emotion characteristics of a given culture are converted into numeric vectors and stored as metadata. Using similarity and weighting computations, the system provides dynamic information about the association between image color and cultural emotion.

Background

Recently, the computing research community has begun to consider cultural content in its research [12]. One field attracting growing attention is image retrieval. Large quantities of images and visual information are now available. These images exist either in structured collections (e.g. museum collections) or independently (e.g. images found in Web pages in the form of individuals’ photographs, logos, and so on) [4]. Image collections now vary not only in number but also in type, because people with different cultural backgrounds can share their images worldwide. Given the trend toward cross-cultural communication, image retrieval systems that take culture-dependent issues into account face the challenge of providing better ways to handle culture-dependent images.

With

regard to culture-dependent image retrieval, the issue of association between

image color and emotions or impression is essential. This issue has already been

addressed for many years. It has been attracting many scholars from various

areas of studies. The color emotion oriented image retrieval system has been

already proposed in number of researches [5], [6], [10], [11]. Current computer vision techniques allow us to extract

automatically low-level features of images, such as color, texture, shape and

spatial location of image elements, but it is difficult to extract high level

features automatically, such as names of objects, scenes, behaviors and emotions

[7]. Current image retrieval still has semantic gap in dealing with cross

cultural environments [2], [3]. Although several emotion based image retrieval

(EBIR) systems and emotion semantic image research (ESIR) systems have already

been proposed in [7], [10], [11], these systems have not addressed issues yet

for culture-dependent emotion. In our approaches, we consider cultural features

of color and emotion association as one of key point for assessing cultural

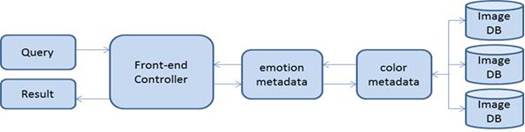

differences in image retrieval system. Figures 1 shows the difference between

the previous model and our model.

EBIR/ESIR (Emotion-Based Image Retrieval/Emotion-Semantic Image Retrieval) System Overview

Our Culture-Dependent Emotion-Based Image Retrieval (CD-EBIR) System Overview

Figure 1: The Architecture Comparison between ESIR/EBIR and our CD-EBIR

Objective

In this research, we developed a culture-dependent color-emotion model for a cross-culture-oriented image retrieval system that realizes color-emotion spaces for searching images by human emotion. Our system creates color impression spaces based on the Plutchik model of emotion [8], [9]. Our method is based on the theory of color psychology, which approaches color-emotion association according to the basic features of emotion and color.

In our multicultural world, color-emotion associations always differ slightly across cultures: a color that means bravery in one culture could give a different impression in another. Taking these differences into account brings our method closer to natural cross-cultural interaction.

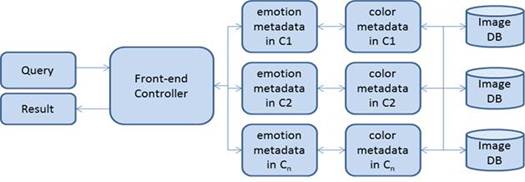

Figure 2 illustrates the core system in the proposed model.

Figure 2: Core System (Culture-Dependent Emotion-Based Image Retrieval (CD-EBIR) Model)

We generated culture-dependent emotion-based image metadata through the following steps. First, color features are extracted using 3-D Color Vector Quantization of the RGB color space [1]. In this step, the color features are represented in the RGB color space. Separately, culture-dependent emotion features are acquired from cultural knowledge through a survey of respondents from three different cultures: Vietnamese, Indonesian and Japanese. Respondents were asked to indicate the emotion or impression, based on the Plutchik model of emotion [8], [9], that they associate with certain colors. The survey results are used to create culture-dependent color semantic metadata.
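As a rough illustration of the color feature extraction step, the sketch below turns a list of RGB pixels into a normalized histogram over a 3-D grid of color bins. It assumes a simple uniform quantization of each channel; the 3-D Color Vector Quantization method of [1] may differ in detail, and the names `quantize_rgb`, `color_feature_vector` and `bins_per_channel` are hypothetical.

```python
from collections import Counter


def quantize_rgb(pixel, bins_per_channel=4):
    """Map an (R, G, B) pixel with 0-255 channels to a 3-D bin index.

    Assumption: uniform bins per channel, not necessarily the scheme of [1].
    """
    return tuple(channel * bins_per_channel // 256 for channel in pixel)


def color_feature_vector(pixels, bins_per_channel=4):
    """Return a normalized histogram over bins_per_channel**3 color bins."""
    counts = Counter(quantize_rgb(p, bins_per_channel) for p in pixels)
    total = len(pixels)
    vector = []
    for r in range(bins_per_channel):
        for g in range(bins_per_channel):
            for b in range(bins_per_channel):
                share = counts[(r, g, b)] / total if total else 0.0
                vector.append(share)
    return vector


# Toy image: two reddish pixels, one blue pixel, one black pixel.
toy_pixels = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (0, 0, 0)]
toy_vector = color_feature_vector(toy_pixels)
```

With four bins per channel the vector has 64 entries, and each entry is the share of pixels falling into that color bin, which is the kind of numeric vector that can be stored as image metadata.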

After the culture-dependent emotion information is obtained, each emotion feature is represented as a vector so that it can be mapped onto the RGB color space and correlated with the nearest colors. Because a particular color can be associated with different emotions and vice versa, we use an automatic clustering method to generate representative colors for each emotion dynamically. After representative colors are chosen and irrelevant colors are excluded, we use them to create the culture-dependent color-emotion metadata. In our culture-dependent emotion-based image retrieval, the query emotion is processed and mapped onto the color-emotion space. We implemented feature weighting and merging to measure similarity when selecting the results.
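The text does not say which clustering method is used to choose representative colors. The sketch below assumes plain k-means with farthest-point seeding over the RGB colors that survey respondents associated with one emotion, and it drops clusters that hold too small a share of the responses. The threshold `min_share`, the function names and the toy responses are all hypothetical.

```python
import math


def farthest_point_seeds(points, k):
    """Pick k seeds: the first point, then repeatedly the point farthest from all seeds."""
    seeds = [points[0]]
    while len(seeds) < k:
        seeds.append(max(points, key=lambda p: min(math.dist(p, s) for s in seeds)))
    return seeds


def assign(points, centroids):
    """Group points by their nearest centroid (Euclidean distance in RGB)."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters


def mean_color(cluster):
    """Channel-wise mean of a non-empty list of RGB tuples."""
    return tuple(sum(channel) / len(cluster) for channel in zip(*cluster))


def kmeans(points, k, iterations=20):
    """Plain k-means; an empty cluster keeps its previous centroid."""
    centroids = farthest_point_seeds(points, k)
    for _ in range(iterations):
        clusters = assign(points, centroids)
        centroids = [mean_color(c) if c else old for c, old in zip(clusters, centroids)]
    return centroids, assign(points, centroids)


def representative_colors(points, k=3, min_share=0.2):
    """Keep centroids of clusters holding at least min_share of all responses."""
    centroids, clusters = kmeans(points, k)
    return [c for c, members in zip(centroids, clusters)
            if len(members) / len(points) >= min_share]


# Hypothetical survey responses for one emotion in one culture.
responses = [(250, 10, 10), (240, 20, 15), (255, 0, 5), (245, 5, 0),
             (10, 10, 240), (20, 5, 250), (15, 0, 245), (120, 120, 120)]
reps = representative_colors(responses)
```

In this toy data the single gray response forms its own small cluster and is discarded as irrelevant, leaving one red and one blue representative color for the emotion.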

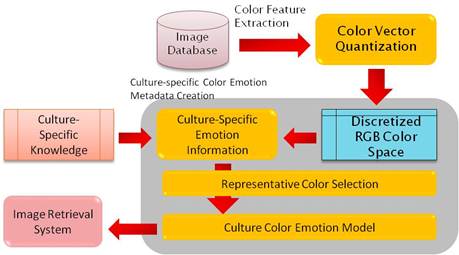

Figure 3 shows the system architecture of the proposed system.

Figure 3: The Architecture of CD-EBIR System

To obtain cultural information about color and emotion, we designed a survey and distributed it to subjects in three different cultures: Japanese, Vietnamese, and Indonesian. From this information, we created a metadata repository of culture-oriented emotion features. Separately, we developed culture-oriented image feature metadata by extracting color features from cultural images such as paintings. Based on the culture-oriented emotion and color feature metadata, we created a color-emotion space. We map color and emotion features onto this space so that the closest distance between features can be measured using similarity and weight computation. A culture-dependent emotion-color subspace is implemented to reduce the result lists and to select the culture-oriented features that are most closely related to the query. An automatic merging system is applied to integrate the result lists from the collections. This step also involves performance analysis of the experimental results to measure the performance and quality of the system. We implemented all the components of our technique and evaluated the prototype on a small-scale dataset of 1000 images.
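The retrieval flow described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implemented system: it assumes a weighted cosine similarity as the similarity measure, a four-axis color space (red, yellow, green, blue), and a merge that simply sorts the scored images from all collections together. All vectors, image identifiers and names such as `search` and `weighted_cosine` are hypothetical.

```python
import math


def weighted_cosine(a, b, weights):
    """Cosine similarity in which each color axis is scaled by a weight."""
    dot = sum(w * x * y for w, x, y in zip(weights, a, b))
    norm_a = math.sqrt(sum(w * x * x for w, x in zip(weights, a)))
    norm_b = math.sqrt(sum(w * y * y for w, y in zip(weights, b)))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(emotion, culture, emotion_metadata, collections, weights, top_k=2):
    """Score every image against the query emotion of one culture and merge the lists."""
    # Culture subspace: only the color-emotion vectors of the targeted culture are used.
    query_vector = emotion_metadata[culture][emotion]
    merged = []
    for collection_name, images in collections.items():
        for image_id, color_vector in images.items():
            score = weighted_cosine(query_vector, color_vector, weights)
            merged.append((score, collection_name, image_id))
    merged.sort(key=lambda hit: hit[0], reverse=True)
    return merged[:top_k]


# Hypothetical metadata over the axes (red, yellow, green, blue).
emotion_metadata = {
    "Japanese": {"happiness": [0.6, 0.0, 0.0, 0.4]},
    "Indonesian": {"happiness": [0.0, 0.5, 0.5, 0.0]},
}
collections = {
    "ukiyo-e": {"u1": [0.7, 0.1, 0.0, 0.2], "u2": [0.1, 0.4, 0.4, 0.1]},
    "balinese": {"b1": [0.0, 0.6, 0.4, 0.0], "b2": [0.5, 0.0, 0.1, 0.4]},
}
weights = [1.0, 1.0, 1.0, 1.0]

japanese_hits = search("happiness", "Japanese", emotion_metadata, collections, weights)
indonesian_hits = search("happiness", "Indonesian", emotion_metadata, collections, weights)
```

The same keyword, "happiness", ranks different images first depending on the culture passed in, because each culture contributes its own color-emotion vector for that keyword.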

Result

Our culture-dependent emotion-based image retrieval system is able to handle image search across three different cultures. The system includes three core components: a color-emotion space, a culture-specific subspace, and an automatic weighting function. In the color-emotion space, the query is processed by analyzing its emotion-related features and applying automatic feature weighting to correlate them with the corresponding color features. The correlation between emotion and color features is used to retrieve a set of images that match the query. The culture-specific subspace is used to select the culture targeted by the query. The system outputs lists of images related to a specific culture.

For the experimental studies, we applied our system to image datasets containing Japanese and Indonesian paintings. We built a database of Japanese Ukiyo-e, based on the Tokyo National Gallery Database, and Indonesian Balinese paintings. The experimental results show that our system can find a set of culture-oriented emotion-based images when a query with emotion and impression keywords is submitted.

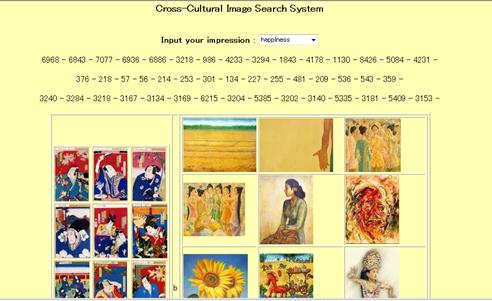

Figure 4: Our Web-based Culture-Dependent Image Search System

Compared with results selected on the basis of human impressions, the precision of our system is still under 70%. It remains difficult to distinguish colors for a set of closely related emotions and impressions such as joy, contentment, and satisfaction. However, our system can indicate the set of emotion-related colors in a specific culture. For instance, Japanese and Vietnamese happiness relates to a set of colors between red and blue, whereas Indonesian happiness refers to a set of colors between yellow and green.
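The evaluation procedure is not detailed here. One plausible reading, shown below as an assumption, is that precision is the share of retrieved images that human judges also selected for the same emotion and culture. The function name and image identifiers are hypothetical.

```python
def precision(retrieved, human_selected):
    """Fraction of retrieved images that also appear in the human-selected set."""
    if not retrieved:
        return 0.0
    relevant = set(human_selected)
    hits = sum(1 for image_id in retrieved if image_id in relevant)
    return hits / len(retrieved)


# Two of the four retrieved images were also chosen by the judges.
example = precision(["img1", "img2", "img3", "img4"], {"img1", "img3", "img9"})  # 0.5
```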

References

[1] Barakbah, A.R. and Kiyoki, Y. 3D-Color Vector Quantization for Image Retrieval Systems. International Database Forum (iDB), Iizaka, Japan, 2008.

[2] Smeulders, A.W.M. et al. Content-based image retrieval at the end of the early years. IEEE Trans. on Pattern Analysis and Machine Intelligence 22, 12, 2000, pp. 1349-1380.

[3] Zhao, R. and Grosky, W.I. Bridging the semantic gap in image retrieval. Distributed multimedia databases: techniques & applications. Idea Group Publishing, Hershey, PA, USA, 2002, pp. 14-36.

[4] Kherfi, M.L., Ziou, D. and Bernardi, A. Image Retrieval From the World Wide Web: Issues, Techniques, and Systems. ACM Computing Surveys, Vol. 36, No. 1, March 2004, pp. 35–67.

[5] Sasaki, S., Itabashi, Y., Kiyoki, Y. and Chen, X. An Image-Query Creation Method for Representing Impression by Color-based Combination of Multiple Images. Frontiers in Artificial Intelligence and Applications, Vol. 190, Proceedings of the 2009 Conference on Information Modelling and Knowledge Bases XX, 2009.

[6] Wang, S. and Wang, X. Emotion Semantics Image Retrieval: A Brief Overview. ACII 2005, LNCS 3784, 2005, pp. 490-497.

[7] Wang, W.N. and Yu, Y.L. Image emotional semantic query based on color semantic description. Proceedings of ICMLC 2005, 2005, pp. 4571-4576.

[8] Plutchik, R. Emotion: A Psychoevolutionary Synthesis. New York: Harper, 1980.

[9] Plutchik, R. Emotions and Life: Perspectives from Psychology, Biology, and Evolution. Washington, D.C.: American Psychological Association, 2003.

[10] Wang, W.N. and He, Q.H. A Survey on Emotional Image Retrieval. Proceedings of ICIP 2008, 2008, pp. 117-120.

[11] Solli, M. and Lenz, R. Color Based Bags-of-Emotions. CAIP 2009, LNCS 5702, 2009, pp. 573-580.

[12] Tosa, N., Matsuoka, S., Ellis, B., Ueda, H. and Nakatsu, R. Cultural computing with context aware application: ZENetic computer. Lecture Notes in Computer Science, Vol. 3711, pp. 13–23, 2010.