Taikichiro Mori Memorial Research Grant Report 2014

A Cross-Cultural System for Human’s Emotions Detection through Impression of Music and Pictures for Interaction between Japan and Europe

Tatiana Endrjukaite

PhD Student, 2nd year

Graduate School of Media and Governance

Abstract

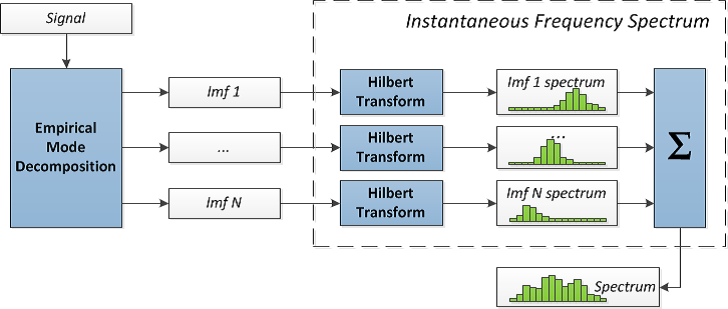

In this research, I study the dependency between the intrinsic characteristics of tunes and pictures and the emotions people of different cultures experience while listening to those tunes and looking at those pictures. The distinctive feature of this research is that it explores cultural differences by determining people's emotions through combinations of music and images. The objective of this research is to construct a system that determines the expected emotional effect on a person of a specified culture who looks at a picture while listening to a tune. The proposed research builds on my previous paper: T. Endrjukaite, Y. Kiyoki. Musical Tunes Emotions Identification System by means of Intrinsic Musical Characteristics. In Proc. of International European Japanese Conference 2014. Moreover, to calculate significant internal features of music pieces, I used the instantaneous frequency spectrum (IFS), which I originally introduced in the paper "Time-dependent Genre Recognition by means of Instantaneous Frequency Spectrum based on Hilbert-Huang Transform", T. Endrjukaite and N. Kosugi, In Proc. of iiWAS 2012.

Introduction

At present, there is no complete theory for automatically analyzing the structure of music and images. However, there is encouraging research on music and image signal processing, for example, detecting the most frequently appearing component of a piece of music through music structure analysis, and recognizing objects by measuring the similarity between shapes in pictures.

The main motivation of this research is to establish a cross-cultural conversion mechanism that represents multimedia of one culture in the context of another culture, to maximize understanding of cultural diversity. Such a mechanism would lead to better interaction and communication in cross-cultural environments. The relation between musical sounds and images and their influence on a person's emotions has been studied by many scientists through experiments, which support the hypothesis that music and pictures inherently carry emotional meaning.

Background

In my previous research on time-dependent genre recognition, I introduced a new signal processing method: the Instantaneous Frequency Spectrum (IFS). IFS was used as the basis for genre recognition and thereby demonstrated its effectiveness in describing tunes. Time-dependent genre recognition, as discussed in that paper, gives a precise description of a tune's internal structure, which helps to enhance understanding of the music. In the current work, IFS is used as an important sound feature.
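According to the title of the iiWAS 2012 paper, IFS is based on the Hilbert-Huang Transform, which combines empirical mode decomposition (EMD) with the Hilbert transform. The following is a minimal sketch of only the Hilbert step. It assumes the tune is treated as a single component, so EMD is skipped. The sketch computes the instantaneous frequency from the analytic signal and then accumulates amplitude-weighted instantaneous frequencies into per-frame histograms. The frame length, bin count and the helper names `instantaneous_frequency` and `ifs_frames` are hypothetical illustrations, not the author's implementation.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal x + i*H[x], built in the frequency domain
    (same construction as scipy.signal.hilbert)."""
    n = len(x)
    spectrum = np.fft.fft(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * h)

def instantaneous_frequency(x, fs):
    """Instantaneous frequency (Hz) and amplitude, one value per sample step."""
    z = analytic_signal(np.asarray(x, dtype=float))
    phase = np.unwrap(np.angle(z))
    freq = np.diff(phase) * fs / (2.0 * np.pi)
    amp = np.abs(z)[1:]
    return freq, amp

def ifs_frames(x, fs, frame_len=1024, n_bins=64, f_max=None):
    """Hypothetical frame-wise spectrum: for each non-overlapping frame, a
    histogram of instantaneous frequencies weighted by instantaneous amplitude.
    Frequencies outside [0, f_max] are ignored by np.histogram."""
    if f_max is None:
        f_max = fs / 2.0
    freq, amp = instantaneous_frequency(x, fs)
    edges = np.linspace(0.0, f_max, n_bins + 1)
    frames = []
    for start in range(0, len(freq) - frame_len + 1, frame_len):
        hist, _ = np.histogram(freq[start:start + frame_len], bins=edges,
                               weights=amp[start:start + frame_len])
        frames.append(hist)
    return np.array(frames), edges
```

For example, take a 440 Hz test tone sampled at 8 kHz and use 40 bins of 100 Hz each. In that case, every frame's strongest bin is the 400 to 500 Hz bin. A real tune would first need to be split into components, for example by EMD, for the instantaneous frequency to be meaningful.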


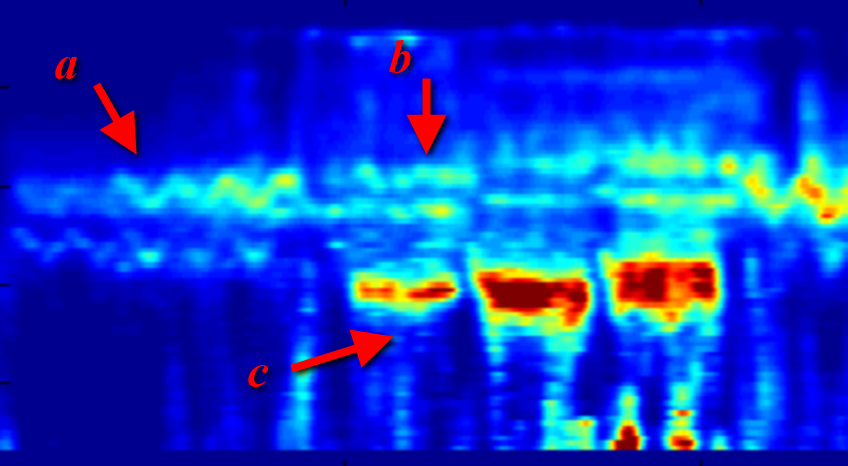

Figure 1. Left: Instantaneous Frequency Spectrum. Right: revealed internal structures: a) introduction fade-in, b) two voices in parallel, c) periodic parts of rhythmic music.

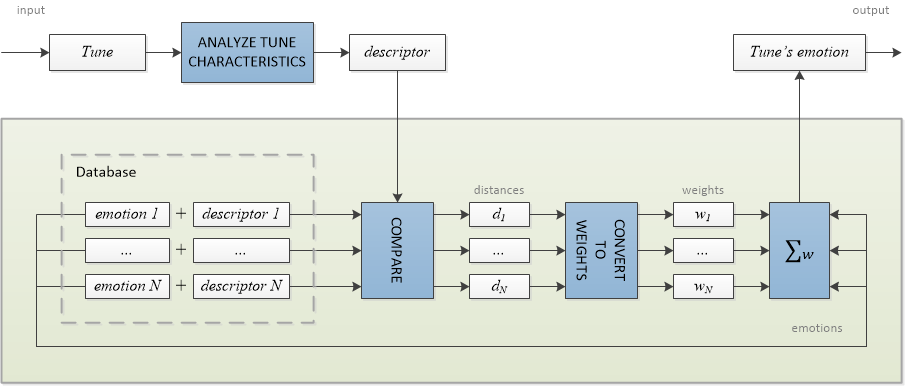

In another study, I designed and implemented a music-tune analysis system for automatic emotion identification and prediction based on intrinsic musical features. To compute the physical elements of music pieces, I defined three significant tune parameters: repeated parts inside a tune, the thumbnail of a music piece, and the homogeneity pattern of a tune. These parameters are significant because they relate to how people perceive music pieces. Together, they make it possible to express the essential emotional aspects of each music piece. I have used the approach of that paper in the current work.

Figure 2. The tune emotion detection system workflow.
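The three tune parameters described above can be illustrated with a self-similarity matrix, a common tool in music structure analysis. The sketch assumes each tune is already represented as a sequence of feature vectors, for example IFS frames. Under that assumption, it uses cosine self-similarity and defines the three parameters as follows:

- The thumbnail is the segment whose frames are, on average, most similar to the whole tune.
- The homogeneity score is the mean similarity of consecutive frames.
- Repeated parts are pairs of distant frames that are nearly identical.

These definitions and the function names are hypothetical and may differ from those in the cited paper.

```python
import numpy as np

def self_similarity(features):
    """Cosine similarity between every pair of frames (rows of `features`)."""
    features = np.asarray(features, dtype=float)
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)
    return unit @ unit.T

def thumbnail(ssm, seg_len):
    """Start index of the length-`seg_len` segment whose frames have the
    highest mean similarity to the whole tune (first one on ties)."""
    n = ssm.shape[0]
    row_means = ssm.mean(axis=1)
    scores = [row_means[s:s + seg_len].mean() for s in range(n - seg_len + 1)]
    return int(np.argmax(scores))

def homogeneity(ssm):
    """Mean similarity between consecutive frames: close to 1 for a uniform tune."""
    return float(np.mean(np.diag(ssm, k=1)))

def repeated_pairs(ssm, threshold=0.9, min_lag=2):
    """Frame pairs (i, j) with j - i >= min_lag whose similarity is at least
    `threshold`: candidate repeated parts."""
    i, j = np.where(np.triu(ssm, k=min_lag) >= threshold)
    return list(zip(i.tolist(), j.tolist()))
```

For a toy tune with the frame pattern A A B B A A, the thumbnail starts at frame 0. The homogeneity is 0.6, and the A frames at the start and end are reported as repeated.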

Objectives

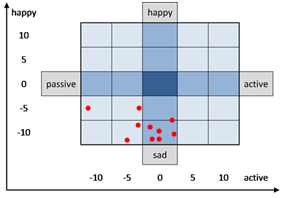

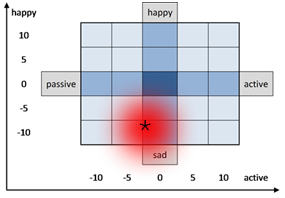

In the current research, I worked on a cross-cultural system for detecting human emotions: a new computational environment designed to support cross-cultural understanding and communication through multimedia. This research provides a new platform for exploring cultural differences by analysing the emotions and impressions evoked when people perceive tunes and images. I took into account the characteristics of human emotions based on Hevner's research in music psychology, which I generalized into an emotional plane, as presented in Figure 3. This generalized method was used to identify the emotions of both images and tunes. Such a representation makes it possible to calculate and estimate emotions continuously, even for values lying between adjacent emotions, because the mathematical expectation of an emotion and its variance can easily be calculated on this plane.

Figure 3. Innovative emotion presentation plane: a) multiple emotions; b) emotion's generalized result.
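The claim that expectation and variance are easy to compute on the emotion plane can be made concrete with a short sketch. The layout here is assumed: one representative adjective per Hevner cluster, placed at equal angles on the unit circle. This is not necessarily the author's exact plane. Given a probability for each emotion, the expected emotion is the probability-weighted mean point. The variance is the expected squared distance from that point. The names `HEVNER`, `expected_emotion` and `nearest_label` are hypothetical.

```python
import numpy as np

# Hypothetical layout: one adjective per Hevner cluster, equally spaced on the unit circle.
HEVNER = ["dignified", "sad", "dreamy", "lyrical",
          "playful", "happy", "exciting", "emphatic"]
ANGLES = 2.0 * np.pi * np.arange(len(HEVNER)) / len(HEVNER)
POINTS = np.stack([np.cos(ANGLES), np.sin(ANGLES)], axis=1)  # shape (8, 2)

def expected_emotion(probs):
    """Mean point and variance of an emotion distribution on the plane."""
    p = np.asarray(probs, dtype=float)
    p = p / p.sum()
    mean = p @ POINTS
    # Variance: expected squared Euclidean distance from the mean point.
    var = float(p @ np.sum((POINTS - mean) ** 2, axis=1))
    return mean, var

def nearest_label(point):
    """Emotion label whose position on the plane is closest to `point`."""
    return HEVNER[int(np.argmin(np.linalg.norm(POINTS - point, axis=1)))]
```

A distribution concentrated on one emotion has zero variance. A uniform distribution has its mean at the centre of the plane and a variance of 1, which signals an ambiguous emotional response.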

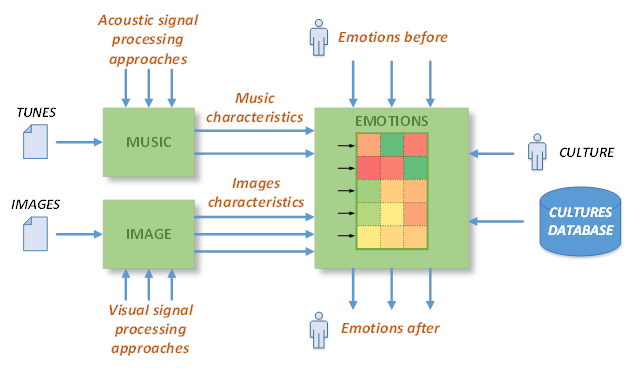

The cross-cultural system consists of two main parts. The first part is a culture-dependent semantic metadata extraction part, which extracts the characteristics of tunes and images as well as impression descriptions (e.g., sad, happy, dreamy, lyrical, playful, emphatic, light) for each culture. The second part is a cross-cultural conversion mechanism, which represents multimedia of one culture in the context of another culture to maximize understanding of cultural diversity and to improve interaction and communication in cross-cultural environments.
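One way to picture the conversion mechanism is as a nearest-neighbour search over emotion profiles. The sketch assumes that the metadata extraction part yields, for each item, a probability distribution over impression words as perceived by a given culture. To convert an item, it selects the target-culture item whose profile in the target culture is closest to the source item's profile in the source culture. Jensen-Shannon divergence is used here as an assumed similarity measure. The function names and item identifiers are hypothetical.

```python
import numpy as np

def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2, range 0..1) between two emotion distributions."""
    p = np.asarray(p, dtype=float) / np.sum(p)
    q = np.asarray(q, dtype=float) / np.sum(q)
    m = 0.5 * (p + q)

    def kl(a, b):
        mask = a > 0  # terms with a == 0 contribute nothing
        return float(np.sum(a[mask] * np.log2(a[mask] / b[mask])))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def convert(source_profile, target_profiles):
    """Return the id of the target-culture item whose emotion profile (as perceived
    in the target culture) is closest to the source item's profile (as perceived
    in the source culture)."""
    return min(target_profiles,
               key=lambda item: js_divergence(source_profile, target_profiles[item]))
```

For example, a source tune perceived as mostly "happy" maps to the target tune that target-culture listeners also perceive as mostly "happy". This holds even if the two tunes differ acoustically.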

During the experiments, I recorded participants' emotional states before and after they perceived the multimedia data. The most important parameter was each participant's culture. Four cultures were included in this research: Japanese, Latvian, Italian and Western Russian. Other significant parameters were taken into account as well: the participants' age, the place where the experiment was held, and the social setting (alone, in the company of friends, etc.). By comparing the data collected before and after each experiment, I determined the changes in emotion caused by the experiment. The subsequent analysis was dedicated to finding common musical and visual characteristics among tunes and pictures that cause similar emotional changes in participants. This analysis used the approaches introduced in my previous research as well as standard correlation analysis.

Figure 4. System architecture: interaction between multimedia processing modules and emotion estimation module.
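The before/after comparison and the correlation analysis can be sketched as follows. The sketch assumes each trial (one participant perceiving one stimulus) yields numeric emotion scores before and after, plus numeric multimedia characteristics of the stimulus. Under that assumption, the emotion change is the difference of the two score vectors. Pearson correlation is then computed between each characteristic and each emotion change. It would be run separately for each culture's subset of trials. The function names and the toy numbers are hypothetical, not data from the experiments.

```python
import numpy as np

def emotion_change(before, after):
    """Per-trial change in each emotion score (rows: trials, columns: emotions)."""
    return np.asarray(after, dtype=float) - np.asarray(before, dtype=float)

def feature_emotion_correlation(features, changes):
    """Pearson correlation between each multimedia characteristic (columns of
    `features`) and each emotion change (columns of `changes`); rows are trials.
    Returns an (n_features, n_emotions) matrix. Constant columns are undefined
    (division by zero) and should be removed beforehand."""
    f = np.asarray(features, dtype=float)
    c = np.asarray(changes, dtype=float)
    fz = (f - f.mean(axis=0)) / f.std(axis=0)
    cz = (c - c.mean(axis=0)) / c.std(axis=0)
    return fz.T @ cz / f.shape[0]
```

With z-scores based on the population standard deviation, the mean of their products equals the Pearson coefficient. So the result matches `np.corrcoef` for each pair of columns.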

As a result, I obtained the probabilities of emotional changes caused by each multimedia characteristic for every culture studied. The system can estimate the expected emotional effect of a tune and image with an accuracy of up to 72%. This result makes it possible to study how people of different cultures perceive multimedia differently, which in turn can lead to better cross-cultural understanding.
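Per-culture probabilities of emotional change, and an accuracy figure of the kind reported, could be obtained from simple counts. The sketch assumes multimedia characteristics are discretized into labels (e.g. "fast tempo") and emotional changes into categories (e.g. "happier"). The estimated probability is then the relative frequency of each change for a given culture and characteristic. The prediction is the most probable change, and accuracy is the fraction of held-out trials predicted correctly. All names and the toy trials are hypothetical illustrations, not the experimental data.

```python
from collections import Counter, defaultdict

def change_probabilities(trials):
    """trials: iterable of (culture, characteristic, emotion_change) tuples.
    Returns probs[culture][characteristic][emotion_change] = relative frequency."""
    counts = defaultdict(lambda: defaultdict(Counter))
    for culture, characteristic, change in trials:
        counts[culture][characteristic][change] += 1
    probs = {}
    for culture, by_char in counts.items():
        probs[culture] = {}
        for characteristic, counter in by_char.items():
            total = sum(counter.values())
            probs[culture][characteristic] = {k: v / total for k, v in counter.items()}
    return probs

def predict(probs, culture, characteristic):
    """Most probable emotional change for this culture and characteristic."""
    dist = probs[culture][characteristic]
    return max(dist, key=dist.get)

def accuracy(probs, test_trials):
    """Fraction of held-out trials whose emotional change is predicted correctly."""
    hits = sum(predict(probs, c, ch) == change for c, ch, change in test_trials)
    return hits / len(test_trials)
```

Because the table is keyed by culture first, the same characteristic can predict different emotional changes for different cultures. This is the kind of difference the research aims to expose.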

In future work, I plan to optimize the system parameters to achieve the highest possible accuracy. As part of this effort, we plan to improve the comparison of IFS spectra by taking into account the characteristics of human hearing. Another direction is to modify and extend the system to study how video data affect emotions when shown alongside music.

Results

The achieved results are presented below.

International Journal

1) T. Endrjukaite, Y. Kiyoki. Emotion Identification System for Musical Tunes based on Characteristics of Acoustic Signal Data. Information Modelling and Knowledge Bases XXV, IOS Press, September 2014.

International Conference

2) T. Endrjukaite, Y. Kiyoki. A Cross-Cultural System for Human's Emotions Detection through Impression of Music and Pictures for Interaction between Japan and Europe. ACM Multimedia 2015, under submission.